TCA 2.1 What's new - CaaS Management Cluster options

While most of the new CaaS features in Telco Cloud Automation are used by workload clusters, this post explores some new functionality for management cluster deployments.

Telco Cloud Automation 2.1 introduces a new v2 CaaS cluster API version. New functionality includes the following:

- AKO Operator Support

- TKG extensions for Prometheus and Fluentbit

- Stretch cluster support in the UI

- A new user interface

- IPv6 support

Not all of this is reflected on management clusters, which still use the v1 API and UI version, but this post covers most of the features. The exceptions are Prometheus, Fluentbit and AKOO, which will get their own blog post shortly, and the new user interface, which isn't supported for management clusters today.

So let's take a look at what changed in the interface when you deploy a new management cluster today. The template creation is still necessary but unchanged, so I will skip that part here.

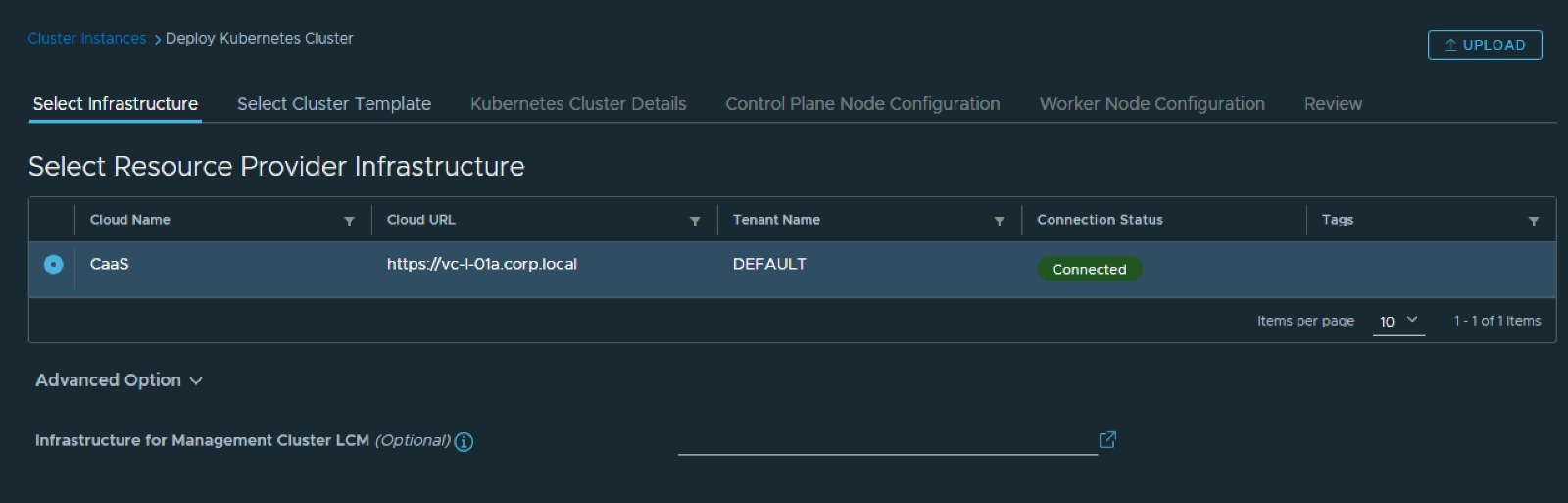

The first new item is an advanced setting to choose the infrastructure for the lifecycle management of the management cluster. This means there is now GUI support for a stretched cluster configuration, in which management clusters can be hosted on different virtual infrastructure, such as a different vCenter, from the workload clusters. This was technically already possible using API calls in previous versions, but Telco Cloud Automation 2.1 adds full GUI support for it.

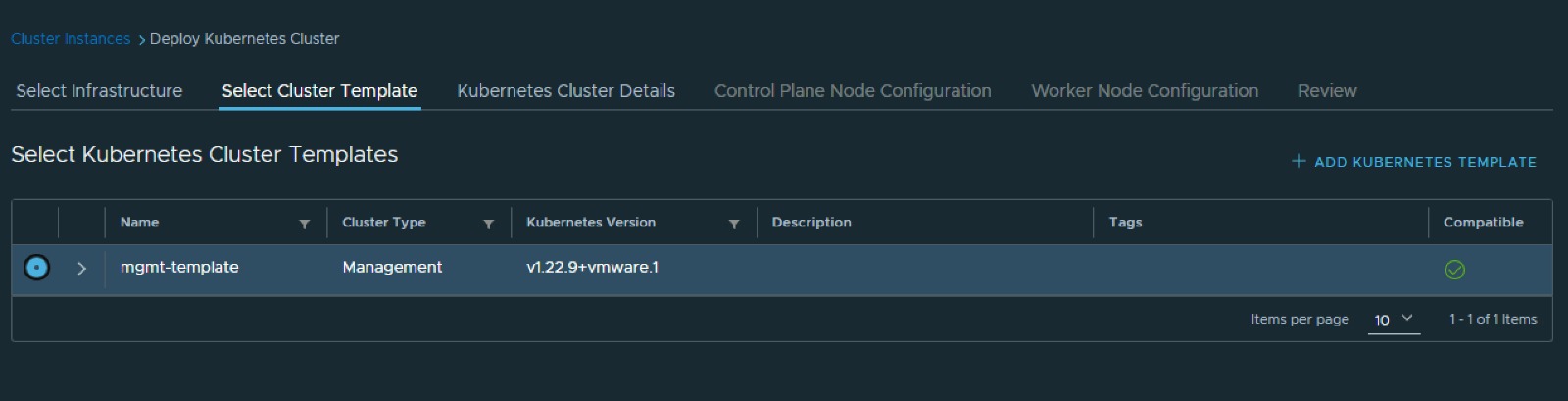

Nothing major changed in the next step, apart from the Kubernetes version for management clusters being bumped to 1.22.9.

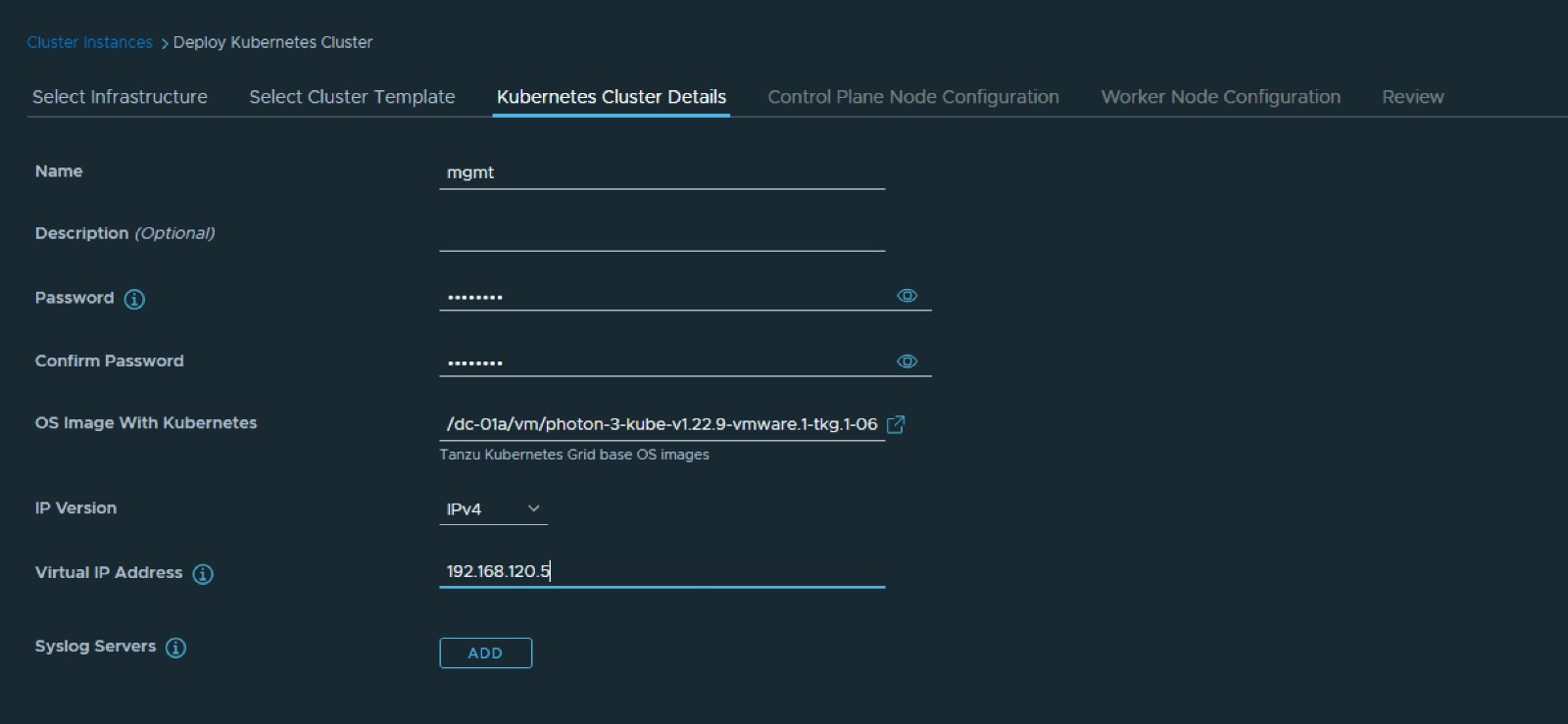

A new option in the Cluster Details screen is the ability to select the IP family (IPv4 or IPv6) for a cluster. Today only single-stack deployments are supported, so either IPv4 or IPv6 but not a mixed mode. The release notes list further limitations for IPv6 for now:

- When using IPv6, the user can register components only with FQDN.

- The user must deploy DNS, DHCP, and NTP using IPv6.

- The user must deploy vCenter and VMware ESXi server using IPv6.

- VMware NSX-T and VMware vRO do not support IPv6. Any feature of VMware Telco Cloud Automation that uses these products cannot work in an IPv6 environment.

- At present, IPv6 does not support the following:

  - Network Slicing

  - Cloud-native deployment using user interface

  - Infrastructure Automation

For me personally, the biggest IPv6 limitation at the moment is the missing vRO integration. In a pure IPv6 deployment you won't be able to run custom MOPs through workflows configured in the NF Designer and have to rely on different mechanisms, depending on the NF vendor.
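The single-stack rule and the FQDN-only registration requirement can be checked before starting the wizard. The following is a minimal sketch, not part of Telco Cloud Automation: the helper names `ip_family` and `is_fqdn` and all sample values are made up for illustration, and it only uses Python's standard `ipaddress` module.

```python
import ipaddress


def ip_family(cidrs):
    """Return 'IPv4' or 'IPv6' if every CIDR belongs to the same family.

    Raises ValueError for an empty list or a mix of families, since only
    single-stack deployments are supported.
    """
    versions = {ipaddress.ip_network(c, strict=False).version for c in cidrs}
    if len(versions) != 1:
        raise ValueError(f"expected exactly one IP family, got {sorted(versions)}")
    return f"IPv{versions.pop()}"


def is_fqdn(value):
    """Return True if value looks like a hostname rather than an IP literal."""
    try:
        ipaddress.ip_address(value)
        return False  # parsed as an IPv4/IPv6 address, so not an FQDN
    except ValueError:
        return "." in value  # very rough check: at least one dot


# Hypothetical sample values
print(ip_family(["fd00:10::/64", "fd00:20::/64"]))
print(is_fqdn("vcenter.example.com"), is_fqdn("fd00:10::5"))
```

Running a check like this against the planned network ranges and component addresses makes a mixed-mode plan or an IP-literal registration visible before the deployment is attempted.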

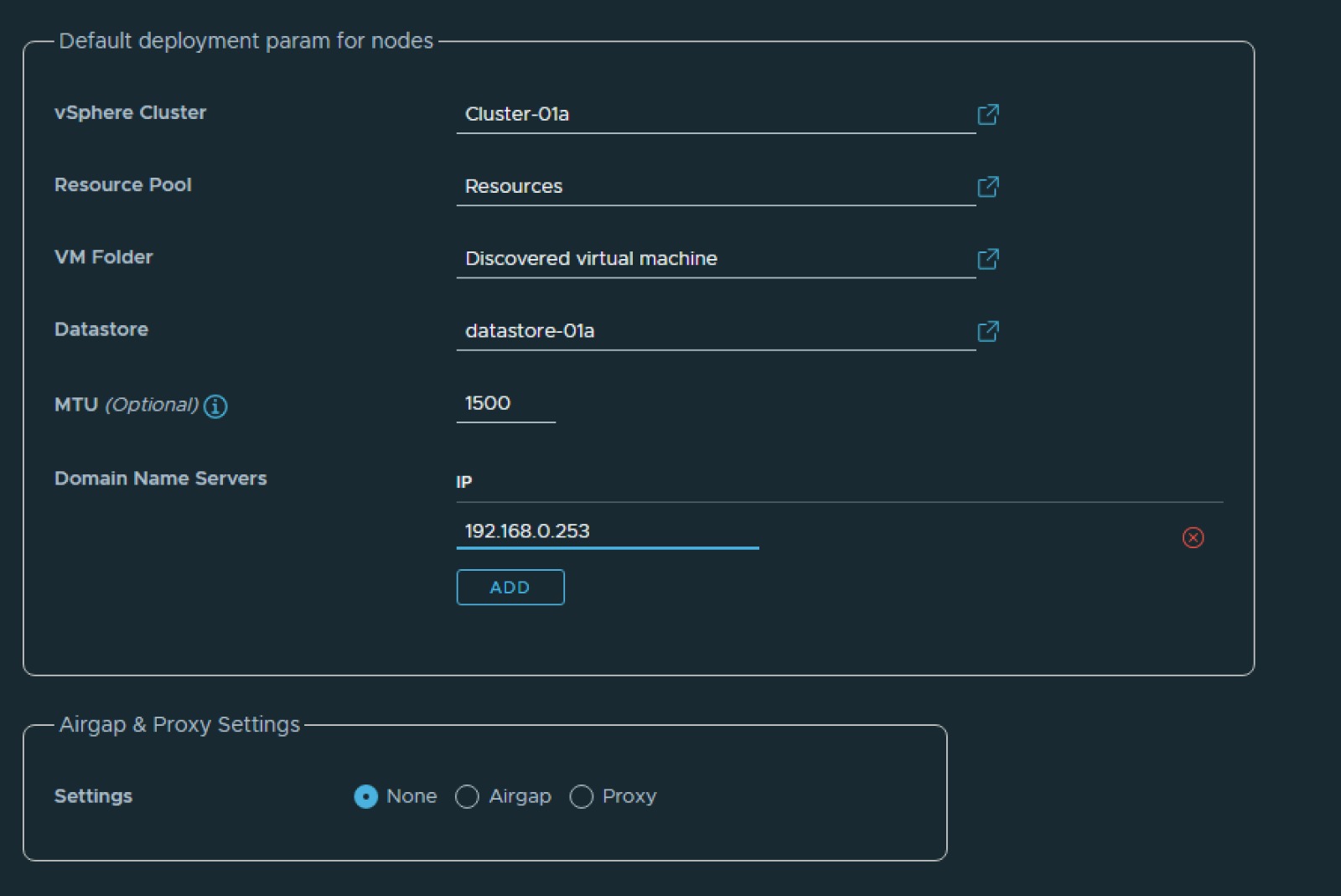

Another new addition is that you can configure a proxy server in addition to an airgap server to provide internet access for package downloads to both management and workload clusters. I noticed one minor issue with the wizard: the NoProxy field is marked as optional, but cluster deployments will fail when this field is left empty. It is recommended to enter the cluster CIDR as well as the CIDR range for infrastructure components like vCenter Server, NSX-T and vRO here. At the moment proxied access to Harbor is also not validated and therefore unsupported.
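Since an empty NoProxy field only shows up as a failed deployment, it can help to assemble the value up front. The sketch below is a hypothetical helper, not a TCA API: the function name `build_no_proxy`, the comma-separated output format and the sample CIDRs are all assumptions, so check the format the wizard expects in your environment.

```python
import ipaddress


def build_no_proxy(cluster_cidrs, infra_cidrs, extra_hosts=()):
    """Build a NoProxy string from cluster CIDRs, infrastructure CIDRs and
    optional extra host names, refusing to return an empty value."""
    entries = []
    for cidr in [*cluster_cidrs, *infra_cidrs]:
        # ip_network() validates the CIDR and normalises its notation
        net = str(ipaddress.ip_network(cidr, strict=False))
        if net not in entries:
            entries.append(net)
    for host in extra_hosts:
        host = host.strip()
        if host and host not in entries:
            entries.append(host)
    if not entries:
        raise ValueError("NoProxy must not be empty")
    # Assumption: entries are joined with commas
    return ",".join(entries)


# Hypothetical values: a cluster CIDR plus the range holding vCenter, NSX-T and vRO
print(build_no_proxy(["100.96.0.0/11"], ["10.10.20.0/24"], ["localhost"]))
```

Raising an error on an empty result mirrors the behaviour described above, where the field is effectively mandatory even though the wizard labels it optional.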

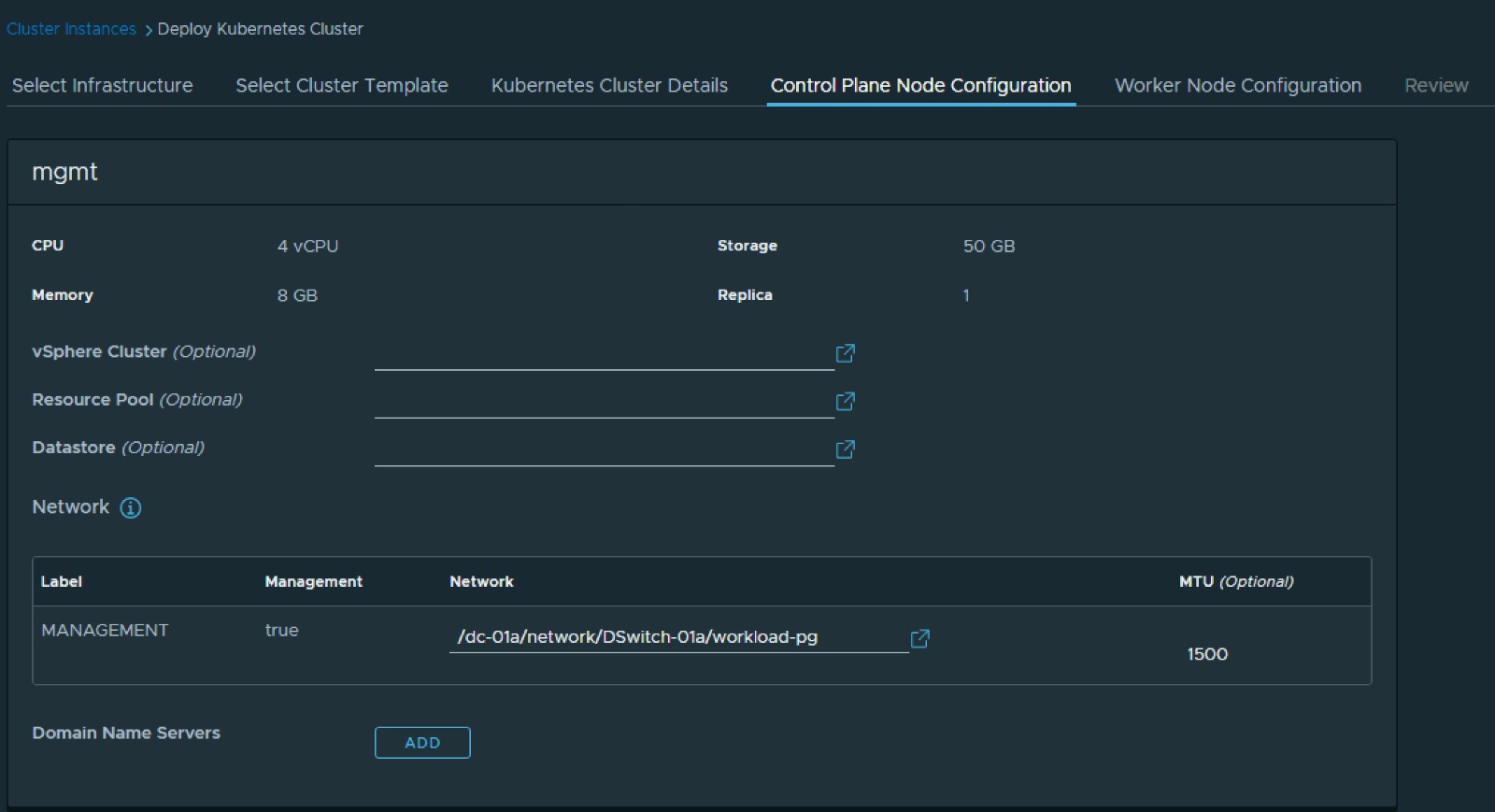

The next screen in the wizard has not changed. The previous wizard already asked for the deployment parameters for compute and storage. If necessary and desired, control plane nodes and worker nodes can have different placement to separate concerns and failure domains on an infrastructure level, and these node types can be assigned to different networks if required for security and compliance purposes.

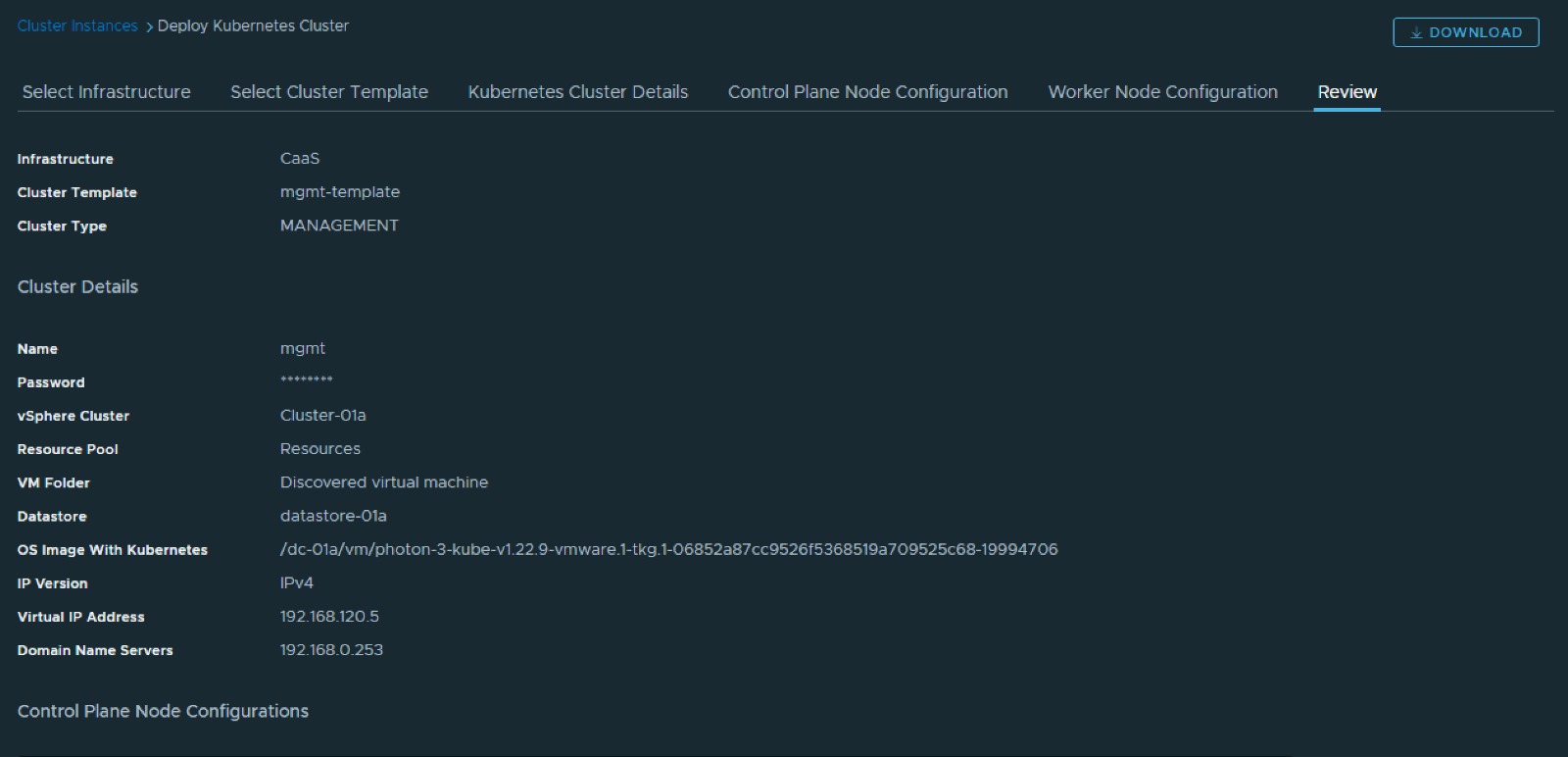

The last page of the wizard is the usual summary page, which also allows you to download the JSON spec (which I would highly recommend).
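One practical use of the downloaded spec is comparing it against the spec of an earlier deployment. The sketch below makes no assumption about the actual TCA spec schema: it flattens any JSON document into dotted paths and reports the differences. The keys in the sample dictionaries are invented for illustration and are not real spec fields.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts and lists into a {path: value} mapping."""
    items = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            items.update(flatten(value, f"{prefix}.{key}" if prefix else str(key)))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            items.update(flatten(value, f"{prefix}[{index}]"))
    else:
        items[prefix] = obj
    return items


def diff_specs(old, new):
    """Return {path: (old_value, new_value)} for every path that differs."""
    a, b = flatten(old), flatten(new)
    return {
        path: (a.get(path), b.get(path))
        for path in sorted(a.keys() | b.keys())
        if a.get(path) != b.get(path)
    }


# Hypothetical specs; with real files, use json.load(open(path)) instead
old_spec = json.loads('{"name": "mgmt-01", "ipFamily": "IPv4", "nodes": [{"cpu": 4}]}')
new_spec = json.loads('{"name": "mgmt-01", "ipFamily": "IPv6", "nodes": [{"cpu": 8}]}')
for path, (before, after) in diff_specs(old_spec, new_spec).items():
    print(f"{path}: {before} -> {after}")
```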

And this basically concludes a management cluster deployment. While the wizard didn't change much, some functionality was added, and being able to use stretched Kubernetes clusters natively, without having to perform API-based imports, is a huge step forward.

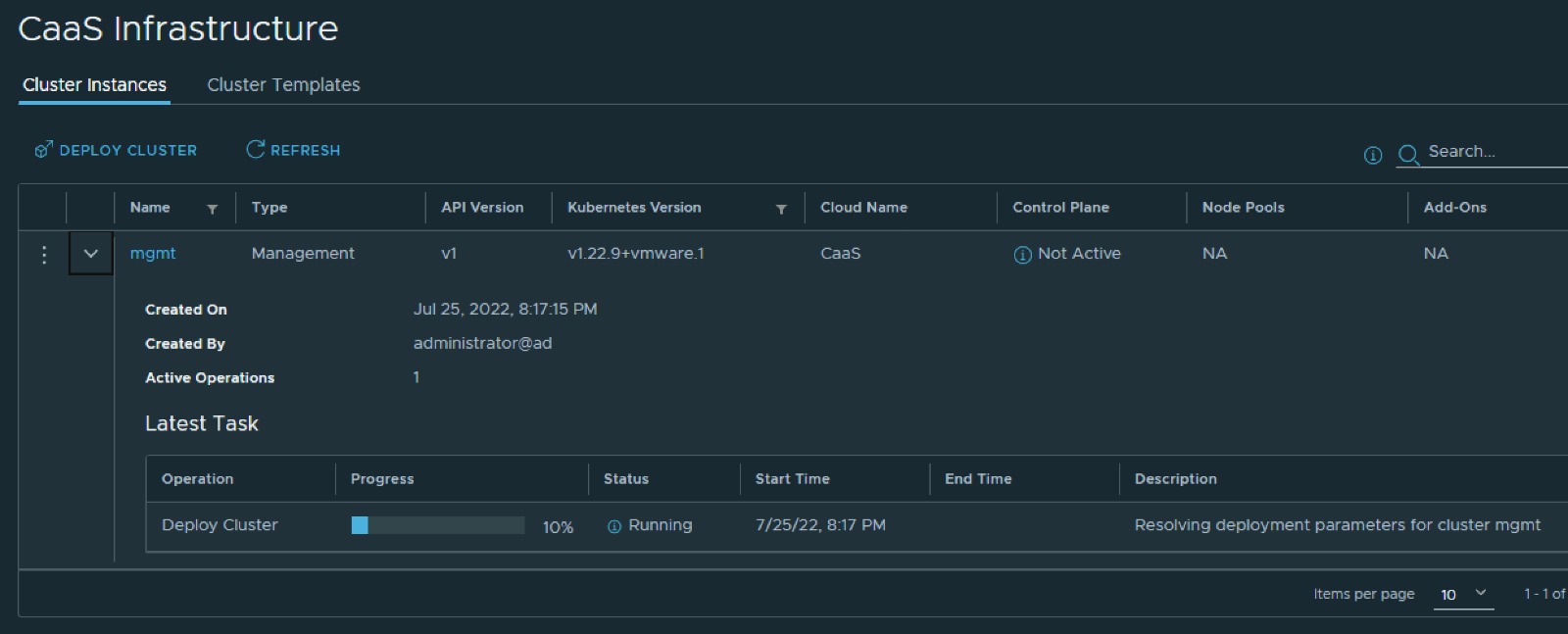

Once the deployment kicks off, it can be monitored from the CaaS infrastructure details.