Hooking into the RabbitMQ bus of VIO

Overview

If you use vCloud Director or vRealize Automation, you will know that both products support custom extensibility through similar frameworks: a message bus emits events, and those events trigger workflows. This blog post explores how to achieve a similar outcome with VMware Integrated OpenStack (VIO).

Exposing the RMQ bus to external sources

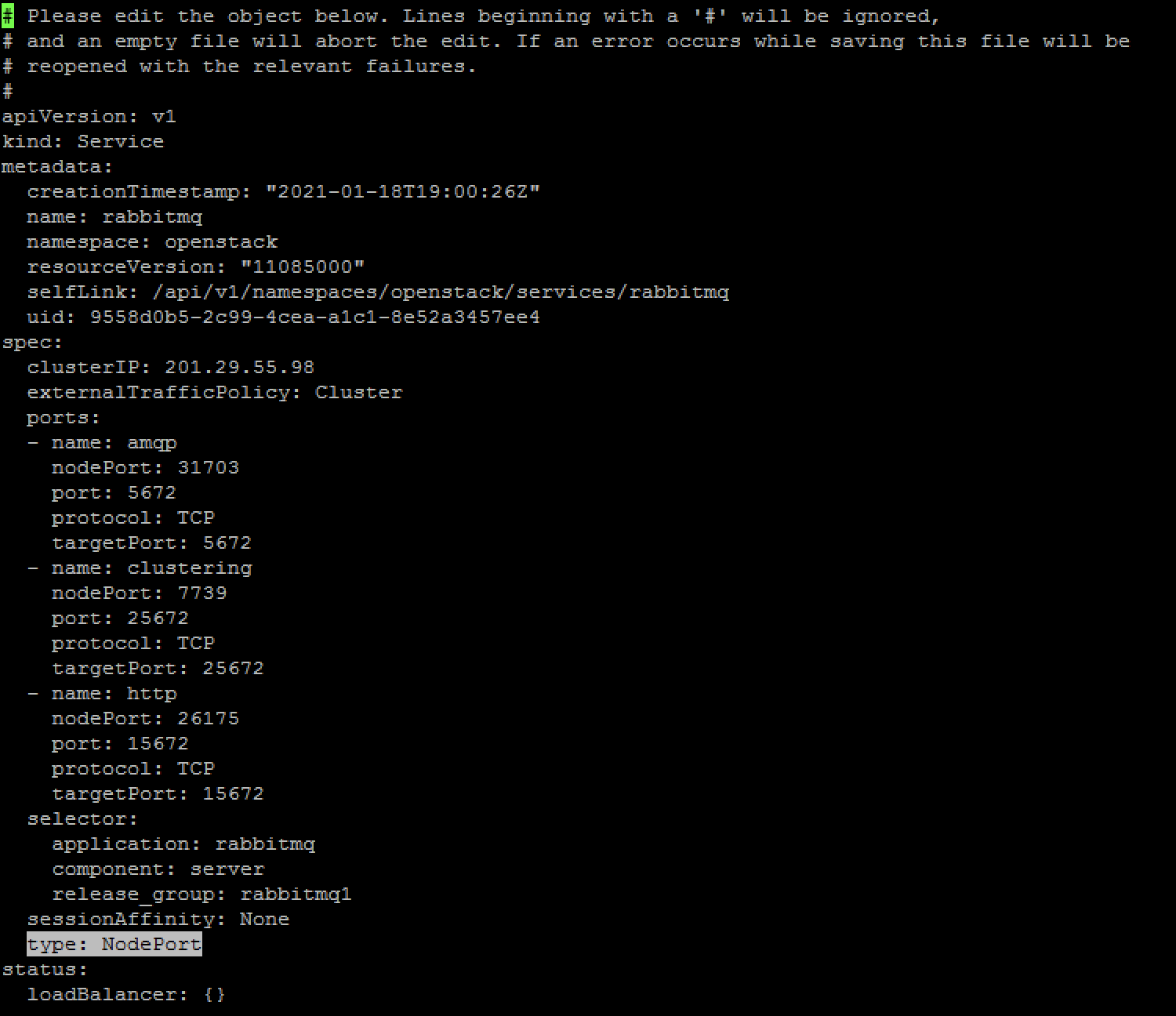

By default, the RabbitMQ bus in a VMware Integrated OpenStack deployment is configured for internal consumption only. The service uses the ClusterIP type, which we can easily change to NodePort with the following command.

osctl edit service rabbitmq

The line which requires changing is marked in the figure below.

Kubernetes then automatically assigns random node ports in the ports section for amqp, clustering, and http. If specific ports are desirable, they can be added manually during the first edit, or the service can be edited again to force a specific port. The amqp and http entries are the ones of interest here, since they expose the message bus itself and the administrative interface.
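For repeatable setups, the same change could be made non-interactively. The following is only a sketch: it assumes that patching the service with `kubectl` in the `openstack` namespace is equivalent to the `osctl edit` above, the helper name `nodeport_patch` is hypothetical, and port 31703 is simply the value that shows up in this lab. The chosen node port also has to fall inside the node port range of the cluster.

```shell
# Hypothetical helper: print a patch body that switches a service to
# NodePort and pins one service port to a chosen node port.
nodeport_patch() {
  port="$1"       # service port, e.g. 5672 for amqp
  node_port="$2"  # node port to pin, e.g. 31703 (lab value)
  printf '{"spec":{"type":"NodePort","ports":[{"port":%s,"nodePort":%s}]}}\n' \
    "$port" "$node_port"
}

# Assumed usage (not run here):
#   kubectl -n openstack patch service rabbitmq -p "$(nodeport_patch 5672 31703)"
```

It is worth checking the resulting service definition afterwards to confirm that all three ports are still listed.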

Verifying external connectivity

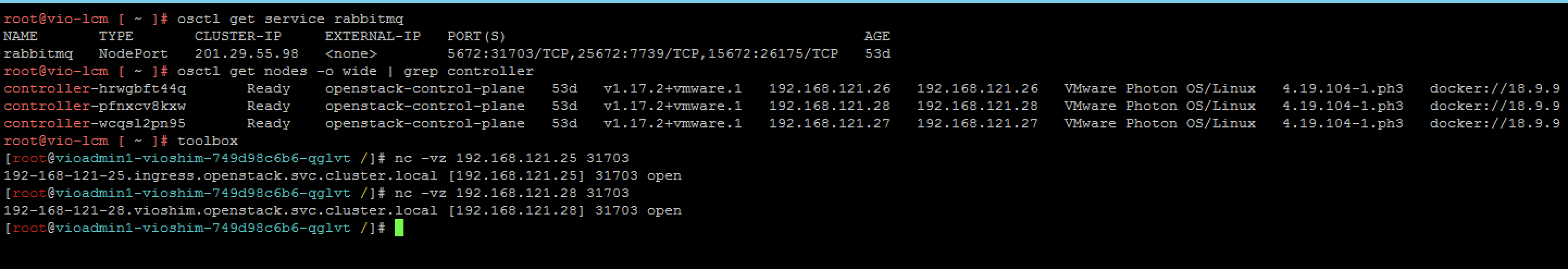

A quick verification of success can be performed from the toolbox container in the LCM shell. The toolbox has netcat installed, so we can verify that the exposed port is reachable externally through the controller's IP, as shown in the figure below.

By running the following command, we can see that RabbitMQ port 5672 is mapped externally to port 31703:

osctl get service rabbitmq
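When scripting the later checks, it can help to pull the node port out of the port mapping instead of reading it by eye. A minimal sketch, assuming the mapping is available in the usual `port:nodePort/protocol` form; the helper name `node_port_for` is hypothetical and the sample string only contains the two mappings mentioned in this post.

```shell
# Hypothetical helper: given a port mapping string such as
# "5672:31703/TCP,15672:26175/TCP", print the node port that is
# mapped to one internal port.
node_port_for() {
  internal="$1"
  ports="$2"
  printf '%s\n' "$ports" | tr ',' '\n' |
    awk -F'[:/]' -v p="$internal" '$1 == p { print $2 }'
}

# Lab values from this post: amqp 5672 is exposed on 31703.
node_port_for 5672 "5672:31703/TCP,15672:26175/TCP"   # prints 31703
```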

The Controller external and internal IPs can be shown with the following command:

osctl get nodes -o wide

During the initial VIO setup a VIP is also configured for this network; in this lab the VIP is 192.168.121.25. To drop into the toolbox container, simply run the following command:

toolbox

From there we can test connectivity with netcat against the controller IPs or the VIP, as in the following example:

nc -vz 192.168.121.25 31703

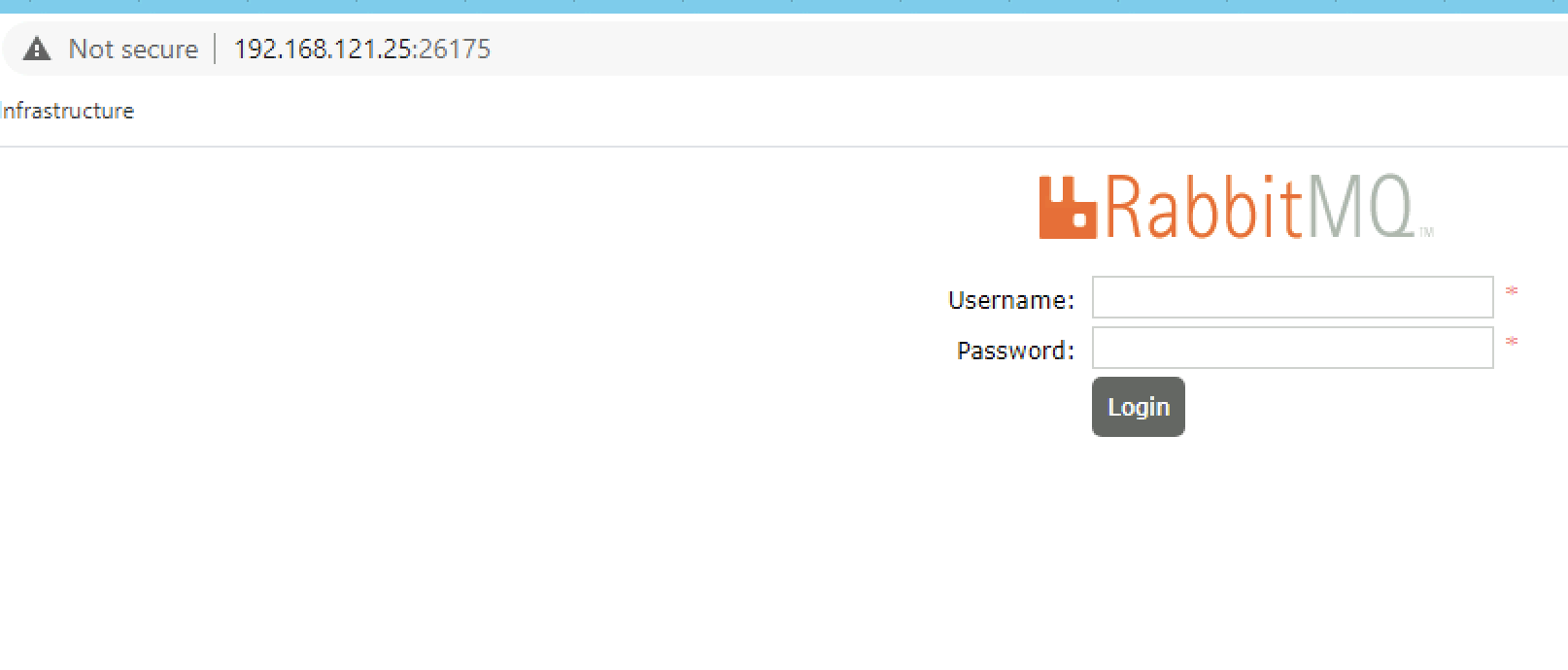

We can also test the management interface by opening a browser and connecting to the VIP (192.168.121.25) on the port mapped to the internal http port 15672, which is external port 26175 in this lab, as per figure 1 above.

The login user for the service is "rabbitmq". Its password is stored base64-encoded in a Kubernetes secret and can be decoded using the following command:

kubectl -n openstack get secret managedpasswords -o yaml | awk '/rabbit/{print $2}'| base64 --decode | xargs echo $1
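Kubernetes stores secret values base64-encoded, which is why the pipeline ends in a decode step. The sketch below replays the same steps on a made-up fragment so that the mechanics are easy to follow; the key name `rabbit_password`, the value, and the helper name `decode_secret_value` are all hypothetical and do not reflect the actual contents of the VIO secret.

```shell
# Hypothetical helper: read secret YAML on stdin, pick the first line
# matching a pattern, and base64-decode the value in its second field.
decode_secret_value() {
  awk -v pat="$1" '$0 ~ pat { print $2; exit }' | base64 --decode
}

# Made-up sample standing in for the real secret output.
sample="data:
  rabbit_password: $(printf '%s' 'example-pass' | base64)"

printf '%s\n' "$sample" | decode_secret_value rabbit
```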

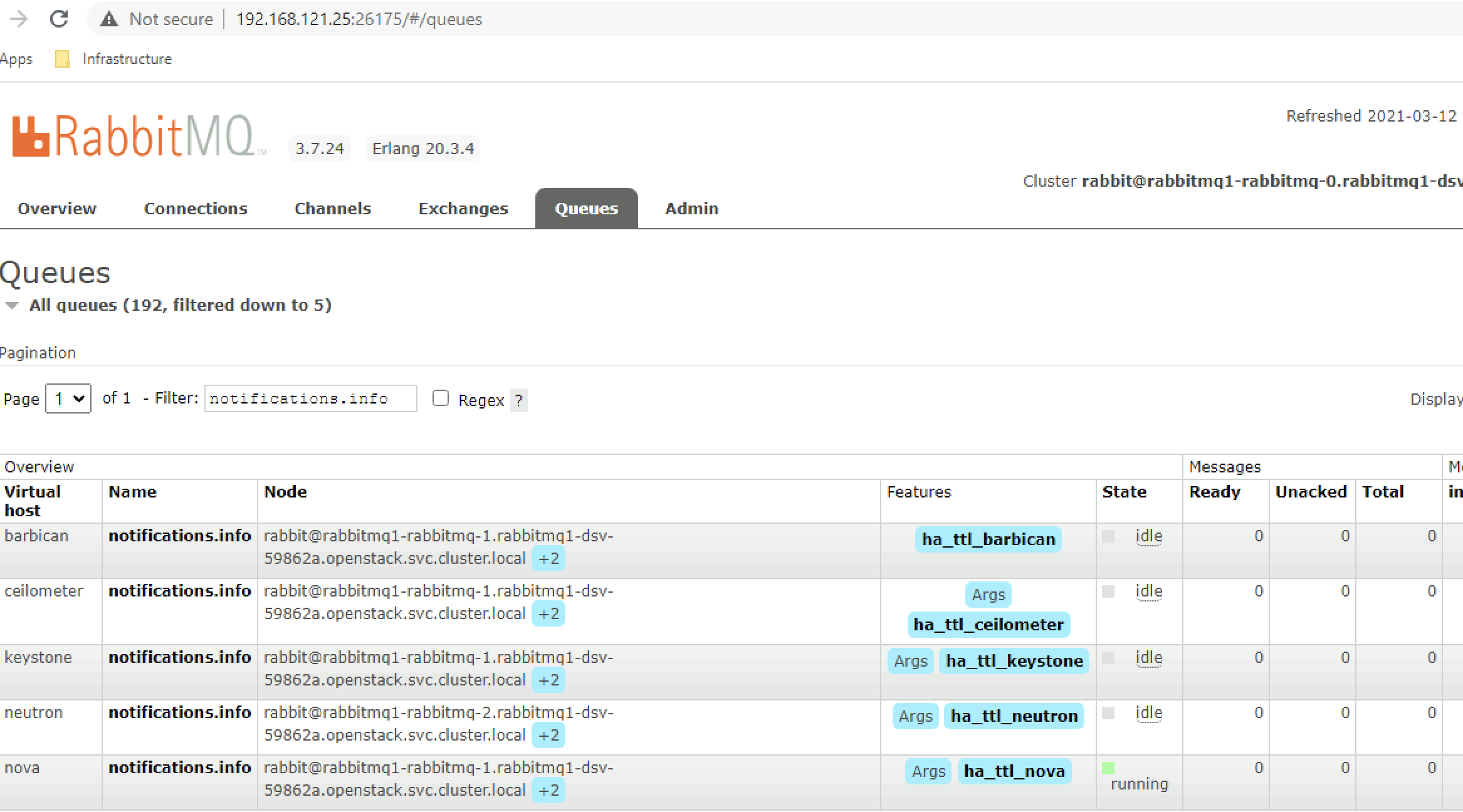

The broker exposes several vhosts (one per OpenStack project, such as nova or neutron), and within each vhost the notifications.info queue is usually the most relevant one to grab messages from. For example, in the nova vhost this queue receives notifications about instance creation, deletion, or modification.
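The same information is also available from the RabbitMQ management HTTP API, which can be handy for a scripted check that a queue exists. A small sketch, assuming the management interface is served over plain http on the exposed port; the helper name `queue_url` is hypothetical, and vhost names containing special characters would additionally need percent-encoding.

```shell
# Hypothetical helper: build the management API URL for one queue.
queue_url() {
  host="$1"; port="$2"; vhost="$3"; queue="$4"
  printf 'http://%s:%s/api/queues/%s/%s\n' "$host" "$port" "$vhost" "$queue"
}

# Lab values from this post: VIP 192.168.121.25, management port 26175.
queue_url 192.168.121.25 26175 nova notifications.info

# Assumed usage (not run here), with the decoded password in $PASSWORD:
#   curl -u "rabbitmq:$PASSWORD" "$(queue_url 192.168.121.25 26175 nova notifications.info)"
```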

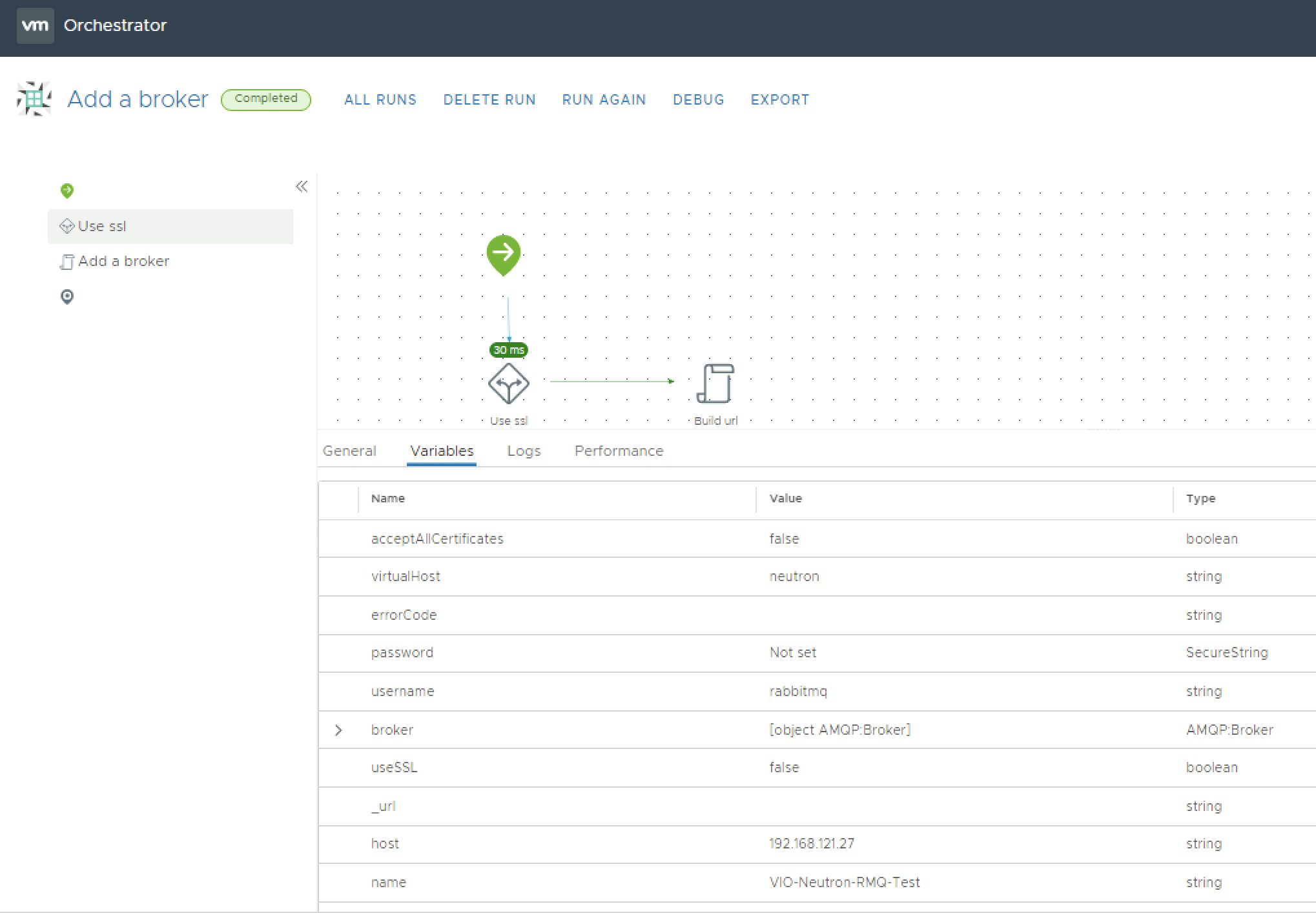

Configuring vRO to read messages

The first step towards consuming messages from the bus within vRO is to register the VIO AMQP broker endpoints in vRO. One endpoint is required per vhost; in most cases you will want at least nova and neutron, and probably cinder as well, if you are looking at asset tracking or change management use cases that automatically update systems on object CRUD operations. Note that the vhost must be entered without a slash: give just the name of the vhost, without any symbols. The workflow reports a successful run whether or not the connection actually succeeds, so the next steps are what let us test the actual connection.
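If the list of broker inputs is prepared in a script first, the slash rule is easy to enforce up front. A minimal sketch; the helper name `normalize_vhost` and the output layout are hypothetical, and 31703 is the amqp node port from this lab.

```shell
# Hypothetical helper: strip a leading slash so "/nova" becomes "nova".
normalize_vhost() {
  printf '%s\n' "${1#/}"
}

# One broker endpoint per vhost, all on the exposed amqp node port.
for v in /nova neutron /cinder; do
  printf 'host=%s port=%s vhost=%s\n' 192.168.121.25 31703 "$(normalize_vhost "$v")"
done
```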

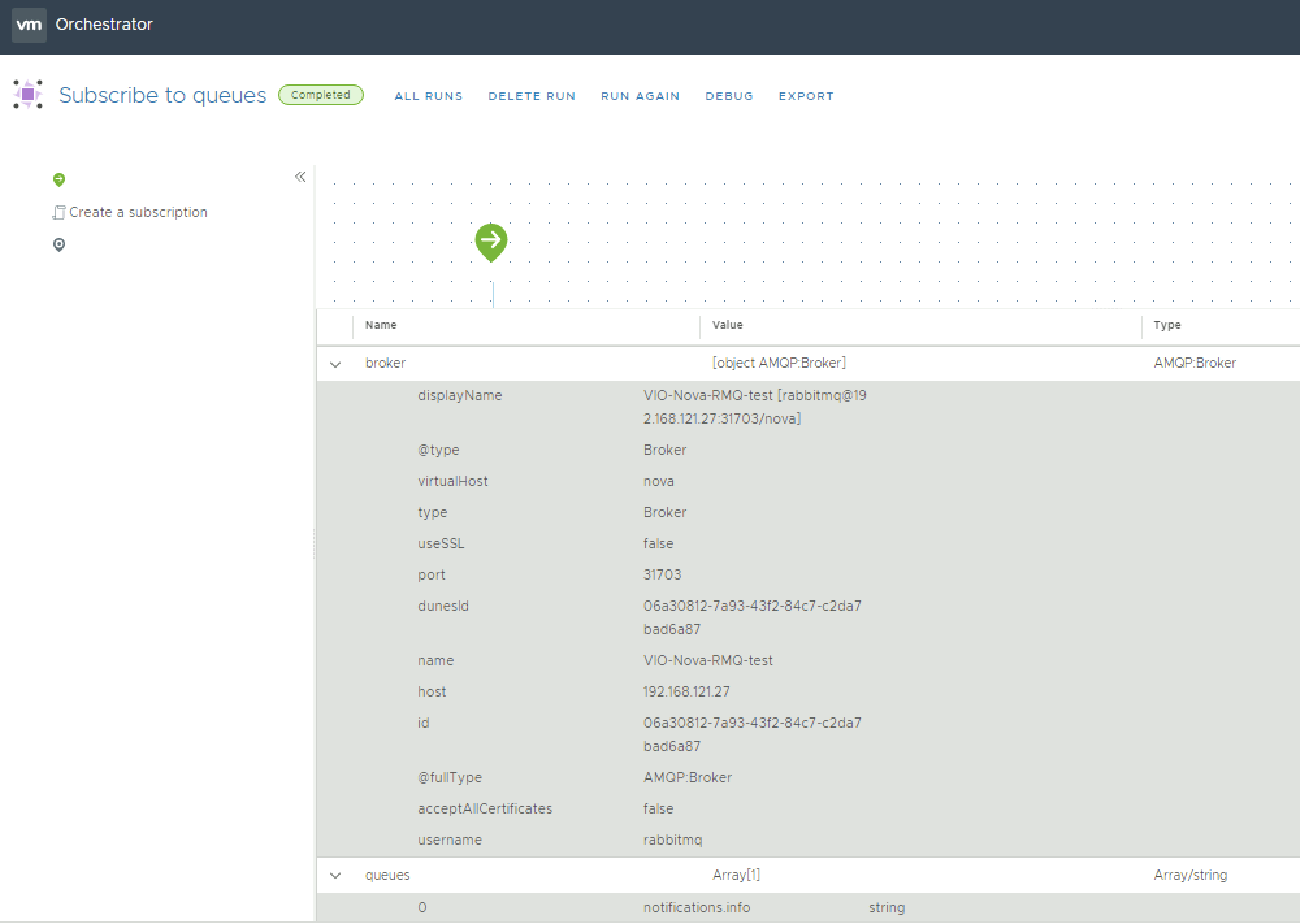

With the broker connection configured, we now want to set up a subscription to a particular queue, e.g. the notifications.info queue on the neutron or nova vhost.

To achieve this we run the "subscribe to queues" workflow. It takes as input parameters an existing broker connection (which we configured with the previous workflow) and the queue name (notifications.info in essentially all use cases). An example run, this time subscribing to a nova broker, is shown in the figure below.

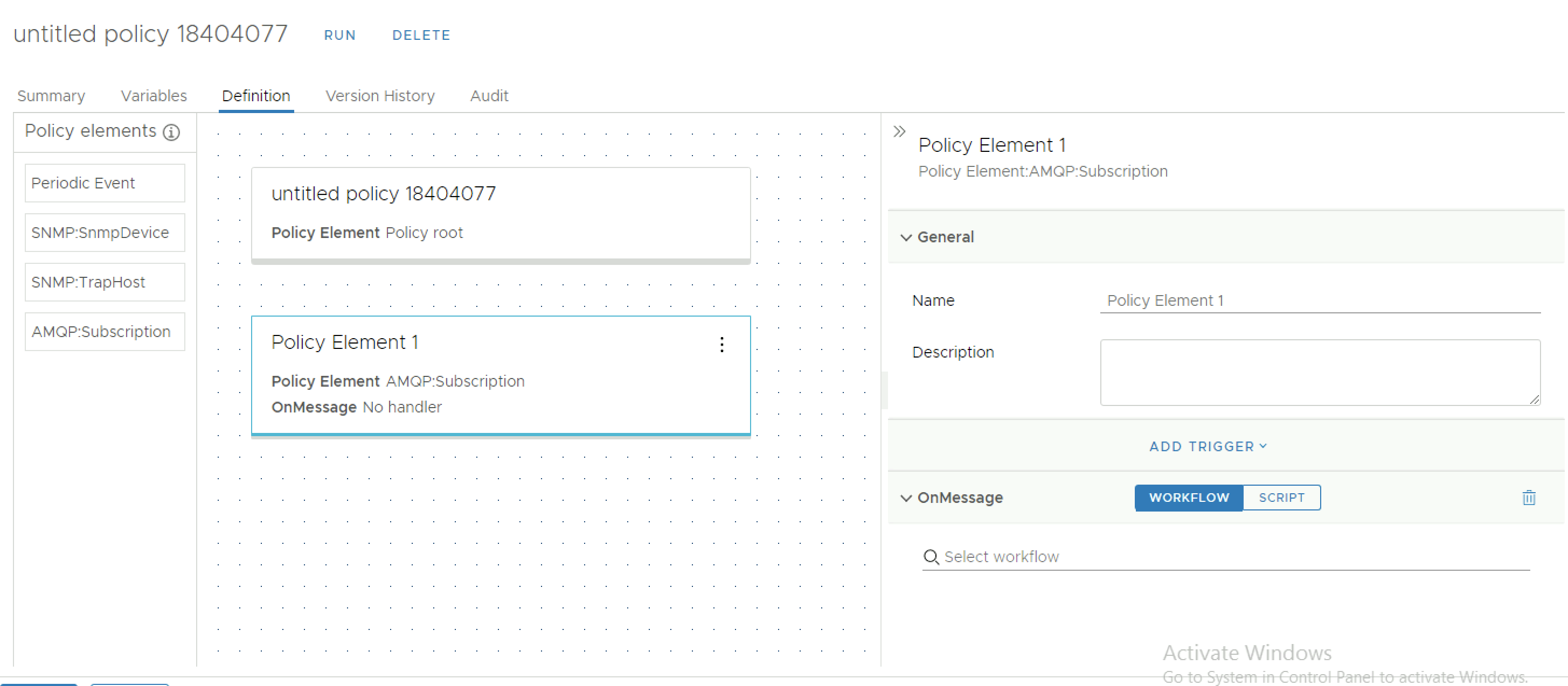

The last piece of the puzzle is a policy that consumes our subscription. The policy can either log messages from the queue or kick off another workflow that evaluates each message and triggers further tasks, such as other workflows or actions, based on its contents, e.g. an external API call to an IPAM system, an asset tracking database, or a monitoring system. The figure below shows a simple example of the standard subscription-based policy, which lets you configure a custom workflow to run on the OnMessage event received on this particular queue. After creation, the policy can be run and scheduled to start with the vRO services.

To quickly show what an instance update looks like on the nova vhost, I used the standard Subscription policy, which simply logs to the vRO default system logs, and then modified an instance. The incoming message can be seen on the Logs tab. In my consuming policy I could therefore filter for an event_type of "compute.instance.update" to pick out only this type of event in a workflow, and then kick off other workflows or actions based on this and other information contained in the message to update external OSS systems.
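The filter logic can be prototyped outside vRO before it is built into a workflow. The sketch below assumes the message body is JSON text in which event_type appears as a quoted string, possibly with escaped quotes if the body is wrapped inside another JSON string; the helper name `event_type_of` and the sample message are hypothetical, and real notifications carry many more fields.

```shell
# Hypothetical helper: print the event_type found in a message body.
event_type_of() {
  # Drop backslashes first so escaped quotes (\") in a wrapped body match too.
  printf '%s\n' "$1" | tr -d '\\' |
    sed -n 's/.*"event_type"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Made-up sample body for illustration only.
msg='{"event_type": "compute.instance.update", "payload": {}}'

if [ "$(event_type_of "$msg")" = "compute.instance.update" ]; then
  echo "instance update: hand off to the follow-up workflow"
fi
```

Inside vRO the equivalent check would live in the workflow that the policy triggers; the shell version is only meant for quick experiments against captured messages.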

Summary

With a few simple steps, we have exposed the RMQ bus of a VIO installation for external consumption, created the necessary broker connections in vRO, subscribed to the relevant queues, and created a policy that triggers when messages arrive in those queues. Evaluating those messages lets us integrate with external systems for asset tracking, change management, IPAM, and various other use cases.